Christian Raymond

Applied Research Scientist

I'm a senior applied scientist at Oracle, where I work on applying large language models to challenging problems in healthcare. Previously, I worked at Amazon, developing a foundation model for 3D computer vision. I completed my PhD at Victoria University of Wellington in New Zealand, where my research focused on meta-learning loss functions for deep neural networks. My current research interests include meta-learning, meta-optimization, hyperparameter optimization, few-shot learning, and continual learning.

Work Experience

Senior Applied Scientist — Oracle

Scientist at Oracle Health and AI (OHAI) working on natural language processing and reinforcement learning, developing models that support and streamline healthcare.

Applied Scientist — Amazon

Internship in the AU computer vision team, automating the labor-intensive process of creating 3D assets for Amazon’s storefront by designing and implementing a foundation model for 3D geometry and material generation.

Recent Publications

PhD Thesis 2025

🏆 Doctoral Dean's List

Meta-Learning Loss Functions for Deep Neural Networks

Christian Raymond

In my thesis, I challenged the conventional approach of manually designing and selecting loss functions. Instead, I showed how meta-learning can be leveraged to automatically infer the loss function directly from the data, yielding fundamental improvements in generalization and training efficiency across a range of deep learning models.

Preprint 2024

Meta-Learning Neural Procedural Biases

Christian Raymond, Qi Chen, Bing Xue, Mengjie Zhang

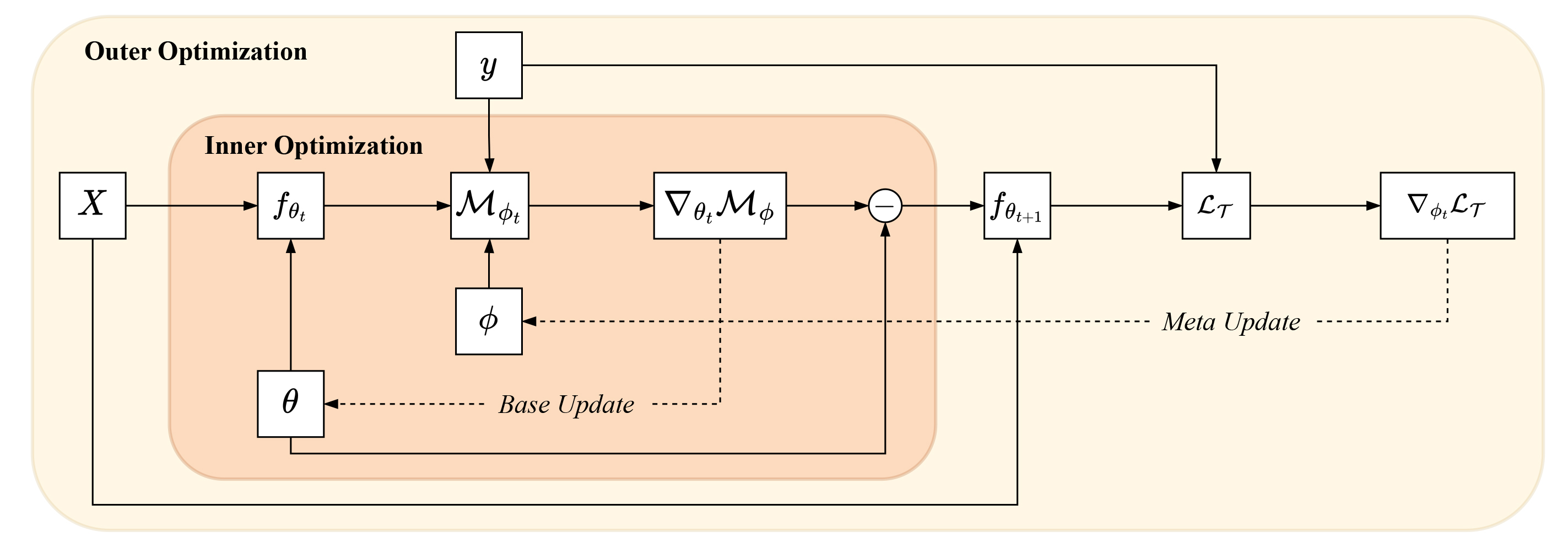

In this paper, we develop Neural Procedural Bias Meta-Learning (NPBML), a task-adaptive method for simultaneously meta-learning the parameter initialization, optimizer, and loss function. The results show that by meta-learning the procedural biases of neural networks, we can induce robust inductive biases into our learning algorithm, thereby tailoring it towards a specific distribution of learning tasks.

TMLR 2025

Meta-Learning Adaptive Loss Functions

Christian Raymond, Qi Chen, Bing Xue, Mengjie Zhang

In this paper, we develop a method called Adaptive Loss Function Learning (AdaLFL) for meta-learning adaptive loss functions that evolve throughout the learning process. In contrast to prior loss function learning techniques, which meta-learn static loss functions, our method meta-learns an adaptive loss function whose parameters are updated in lockstep with the parameters of the base model.

TPAMI 2023

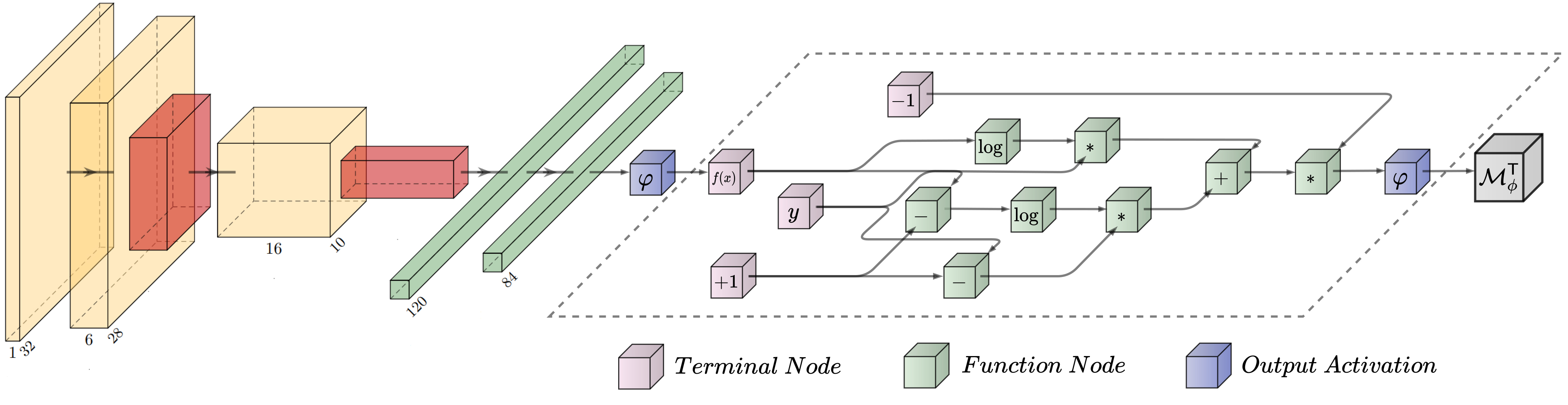

Learning Symbolic Model-Agnostic Loss Functions via Meta-Learning

Christian Raymond, Qi Chen, Bing Xue, Mengjie Zhang

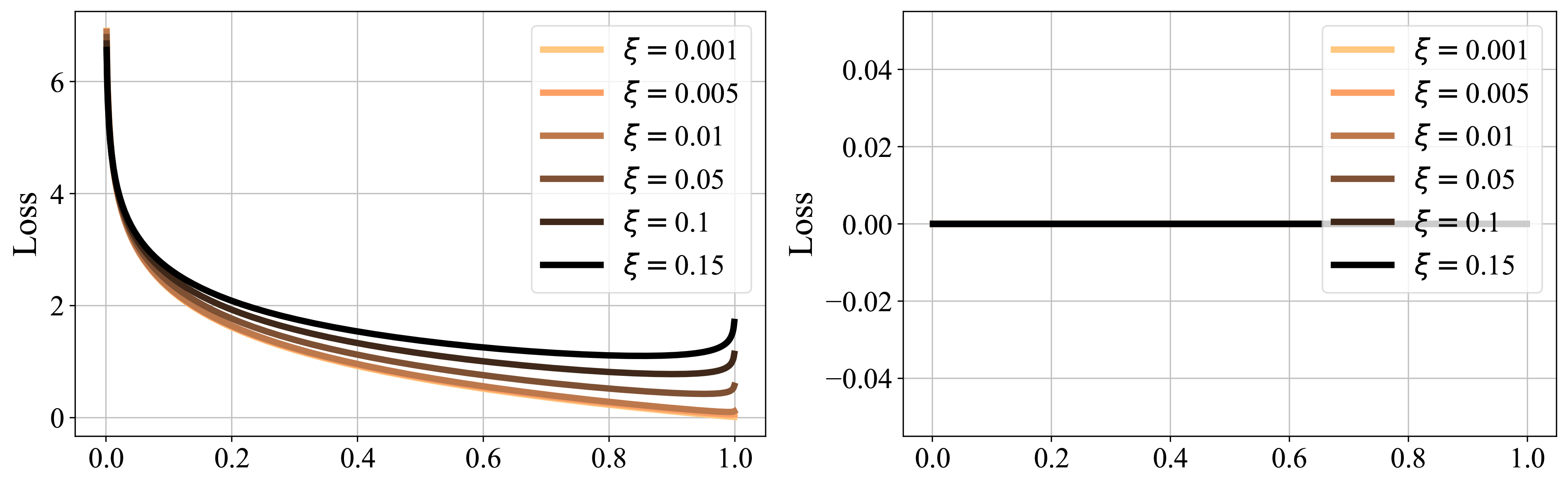

In this paper, we propose Evolved Model-Agnostic Loss (EvoMAL), a novel meta-learning method for discovering symbolic, interpretable loss functions. EvoMAL combines evolution-based search with gradient-based optimization, enabling efficient training on commodity hardware. We also present a theoretical analysis of meta-learned loss functions, which motivates a new label smoothing technique called Sparse Label Smoothing Regularization (SparseLSR).

GECCO 2023

🏆 Nominated for Best Paper

Local-Search for Symbolic Loss Function Learning

Christian Raymond, Qi Chen, Bing Xue, Mengjie Zhang

In this paper, we propose a meta-learning framework for loss function learning that combines evolutionary symbolic search with gradient-based local optimization. The framework first discovers symbolic loss functions using genetic programming, then refines them by solving a bilevel optimization problem via unrolled differentiation.

Education

Victoria University of Wellington

Doctor of Philosophy (PhD) in Artificial Intelligence

Thesis: Meta-Learning Loss Functions for Deep Neural Networks

Advisors: Dr Qi Chen, Prof Bing Xue, and Prof Mengjie Zhang

Victoria University of Wellington

Bachelor of Science Honours (BSc Hons), First Class; majoring in Computer Science, specialising in Artificial Intelligence

Victoria University of Wellington

Bachelor of Science (BSc); triple major in Computer Science, Information Systems, and Philosophy